| Laura Gregg | Jerry Dotson |
|---|---|
| Wizard Textware | Director, Technical Education |
| 10445 NE 15th St | AT&T Wireless Services, Inc. |
| Bellevue, WA 98004 | 14520 NE 87th Street |
| | Redmond, WA 98052 |

The Technical Education group at AT&T Wireless Services, with the assistance of an equipment vendor, developed a certification program for network operators. The program produced 24 operators with documented skills to specified performance standards in network monitoring, troubleshooting, and administration. A key component in program success was task-based on-the-job training.

Keywords: Certification, DACUM, performance technology, telecommunications, training

In response to the increasing size and complexity of the cellular telephone network, AT&T Wireless Services reorganized its network operations, moving important network monitoring and troubleshooting functions from nearly 100 network elements (switches, signal transfer points, digital cross-connect systems, and service control points) to four regional Network Operation Centers. The Network Operation Centers provide coordinated management of cellular network events 24 hours a day, seven days a week.

AT&T Wireless Services staffed the Network Operation Centers with new hires, specialists willing to move from the network element function to the Network Operation Center, and other employees with varying levels of skill in network operations.

The problem the company faced was that few individuals were skilled at monitoring and troubleshooting all network elements. A further complication was that AT&T Wireless Services had built its network by purchasing several regional markets across the country, each with its own way of monitoring and maintaining its network. As a result, few best-practice standards were shared by all the Network Operation Centers, and service quality varied from region to region. These operating differences also made it difficult to move operators from one region to another, which is often required during major regional outages or other changes in network operations.

The challenge was to build quality into the on-the-job performance of the advanced network operators so that they could consistently and reliably monitor and troubleshoot the wireless network. AT&T Wireless Services, in cooperation with one of its equipment vendors (Ericsson Inc., 1996), set out to develop a training program that would produce an initial group of 24 advanced Network Operation Center operators whose skills could be certified to a defined level of on-the-job performance.

Certification offered the following advantages to the company:

In addition, we found that the development process, of necessity, improved communication among the company's operations, engineering, and support groups, and resulted in well-defined and developed processes.

Certification also offered the following advantages to employees:

The certification program had five major components:

The most powerful components of the program are the job and task analyses and the structured on-the-job training. The job and task analyses provide role clarity and procedural standardization. In fact, these analyses, whether or not they end in a certification program, are effective ways to define an organization's expectations about a job (what the job is), and to produce standard procedures for performing the job (how the job is done).

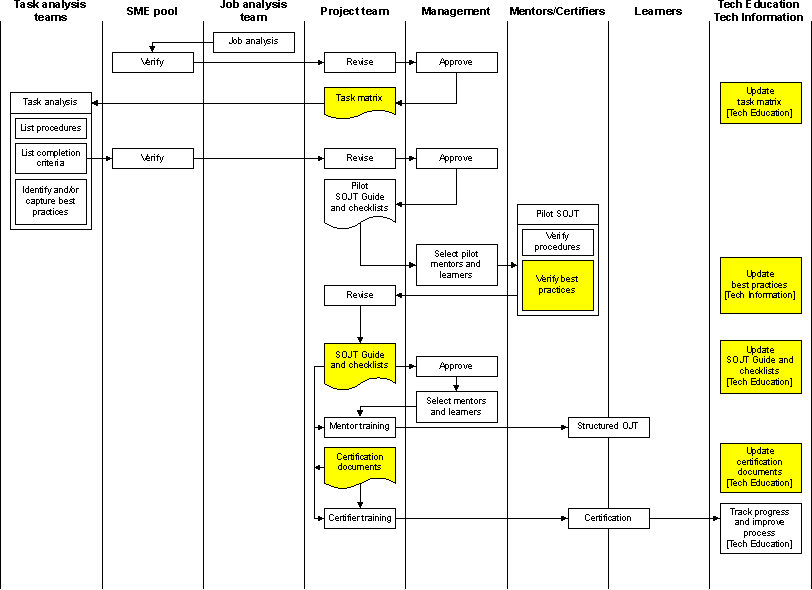

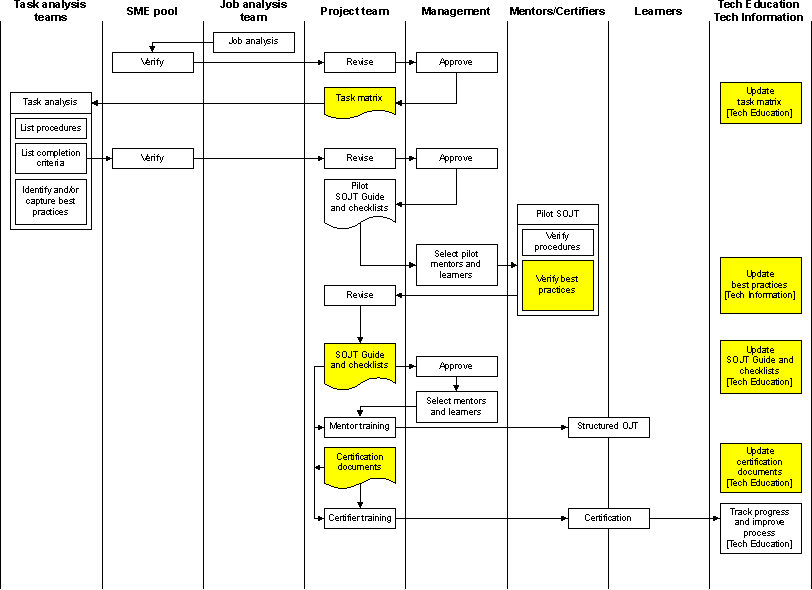

The structured on-the-job training provides a system for training people with a one-on-one mentor/learner model that focuses clearly on the whats and hows of the job, in a way that everyone (managers, mentors, and learners) can objectively observe and measure. Classroom training and job experience support a person's ability to perform the tasks, but most of the certification process evaluates actual task performance based on the structured on-the-job training. Figure 1 shows an overview of the certification program development process.

Figure 1. Overview of a typical certification program development process

Program development took place in eight stages:

To generate a list of duties and tasks, we used the DACUM process (Adams 1975). Essentially, this process is structured brainstorming in which a group of people (the panel) with the required knowledge about a job meets in a facilitated two-day session. In its purest form, the panel is made up of individuals currently working in the job. We have found, however, that this robust process can be equally effective with other kinds of panels, for example:

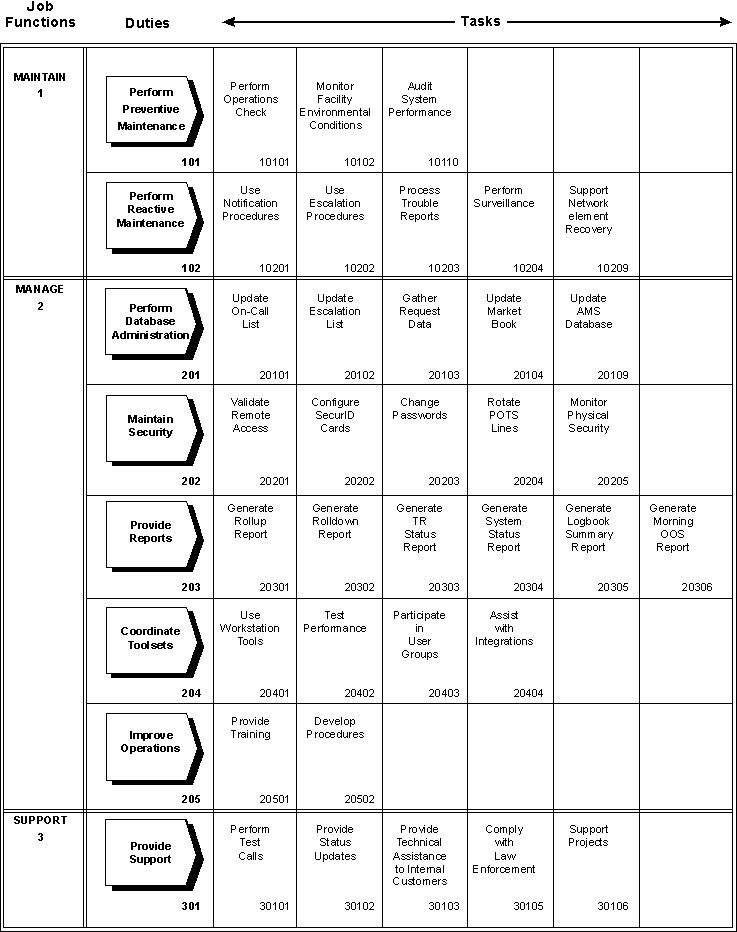

Because we did not have a group of experienced individuals working in the job of Network Operation Center operator, we used the first variation. Panels of three to six subject matter experts for each of the network elements defined the functions, duties, and tasks required to monitor and troubleshoot the network element. An additional panel of Network Operation Center managers defined a set of general functions, duties, and tasks that were not specific to any network element, but were required by the advanced Network Operation Center operator's job. Figure 2 shows the resulting task matrix for the general Network Operation Center tasks. The task matrix shows what has to be done.

Figure 2. Task Matrix for General Network Operation Center Tasks

We then submitted the task matrix for each network element to a larger pool of subject matter experts for revision and approval. They added tasks they felt were missing and refined some task statements for clarity.

We found it relatively easy to arrive at consensus about what needed to be done for each network element. Finding agreement on how the tasks should be done was more difficult. Complex systems that support 24-hour customer demands often require ad hoc responses, even for what might be considered routine tasks. Furthermore, given the "patchwork" nature of the enterprise, procedures varied from market to market. To build commitment to procedural standardization where it was necessary and appropriate, we staffed the subject matter expert pool with individuals whose skills were recognized and respected by managers and co-workers. It was agreed up front that the procedures these experts selected would be standards acceptable to everyone.

For each task, two subject matter experts from the pool met with a technical writer specially trained to elicit and document clearly defined, measurable procedural steps. Each step was accompanied by a completion criterion and a reference to a controlled document containing the best practice. The resulting training document, called a Structured On-the-Job Training Guide, both summarizes and points to details about how the task is to be done.
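The structure of a guide entry described above (procedural steps, each with a completion criterion and a pointer to a controlled document) maps naturally onto a small record type. The sketch below is a hypothetical illustration, not the format AT&T Wireless Services actually used: the class names `Step` and `GuideTask`, the step wording, and the document IDs such as `DOC-SW-001` are all invented. It also shows how, once steps are captured this way, a mentor checklist can be generated from them.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Step:
    """One procedural step with its completion criterion and best-practice reference."""
    action: str
    completion_criterion: str  # observable evidence that the step was done correctly
    reference: str             # ID of the controlled document (hypothetical format)


@dataclass
class GuideTask:
    """One task in a Structured On-the-Job Training Guide for a network element."""
    name: str
    element: str
    steps: list[Step] = field(default_factory=list)

    def checklist(self) -> list[str]:
        """Render the steps as mentor checklist lines with a box to tick."""
        lines = [f"Task: {self.name} ({self.element})"]
        for number, step in enumerate(self.steps, start=1):
            lines.append(
                f"[ ] {number}. {step.action} | done when: "
                f"{step.completion_criterion} | see: {step.reference}"
            )
        return lines


# Hypothetical example: step wording and document IDs are invented for illustration.
task = GuideTask(
    name="Perform triage",
    element="Switch",
    steps=[
        Step("Acknowledge the alarm", "Alarm shows as acknowledged", "DOC-SW-001"),
        Step("Identify affected trunks", "Trunk list recorded in ticket", "DOC-SW-002"),
    ],
)

for line in task.checklist():
    print(line)
```

Keeping the reference as a document ID rather than copying the procedure text mirrors the guide's role of summarizing the task and pointing to the controlled document for details.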

In some cases, the references pointed to best practices described in controlled documents provided by a network element vendor or to existing documents produced by the AT&T Wireless Services Technical Information group. In cases where there was no controlled document describing a best practice, the subject matter experts created one.

For undocumented best practices, each subject matter expert brought whatever source material he or she could find for that task or procedural step. As might be expected, many individual technicians, Network Operation Center operators, and network element specialists had developed their own sets of notes, procedures, and checklists that served as job aids.

Using all these sources, the experts in the writer-facilitated task analysis sessions were able to arrive at consensus about a best practice and prepare the material for input to Technical Information. Technical Information either incorporated this information into the appropriate existing document or organized and formatted it into a new controlled document.

A final draft of the Structured On-the-Job Training Guide for each network element was submitted to the same pool of subject matter experts for revision and approval. Revisions were generally minor, because the experts had all participated at some level in the guide's creation.

At the end of the task analysis step, all the tasks from all network elements were integrated into one task matrix. In some cases, network elements shared the same task name: for example, the task "Perform triage" appears in the individual matrices of three different network elements. In other words, what is done is the same, but how it is done differs. The integrated task matrix lists such a task name once, with an abbreviated notation showing the elements in whose Structured On-the-Job Training Guides the mentor and learner will find the element-specific procedures.
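The merge described above, where a shared task name appears once in the integrated matrix along with the elements that hold their own procedures for it, can be sketched as an inversion of per-element task lists. This is a minimal illustration under assumptions: apart from "Perform triage", the task names, the choice of which three elements share that task, and the short codes in `ABBREV` are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical per-element task matrices; only "Perform triage" comes from the text.
element_tasks = {
    "Switch": ["Perform triage", "Back up switch data"],
    "STP": ["Perform triage", "Monitor link sets"],
    "SCP": ["Perform triage", "Check database status"],
    "DCS": ["Verify cross-connects"],
}

# Hypothetical short codes used as the "abbreviated notation" in the merged matrix.
ABBREV = {"Switch": "SW", "STP": "STP", "SCP": "SCP", "DCS": "DCS"}


def integrate(matrices: dict[str, list[str]]) -> dict[str, list[str]]:
    """List each task name once, with every element whose guide holds a procedure for it."""
    merged: dict[str, list[str]] = defaultdict(list)
    for element, tasks in matrices.items():
        for task in tasks:
            merged[task].append(element)
    return dict(merged)


integrated = integrate(element_tasks)
for task, elements in sorted(integrated.items()):
    codes = ", ".join(ABBREV[e] for e in elements)
    print(f"{task:<26} [{codes}]")
```

The task name is the key ("the what"), while the list of elements tells the mentor and learner which guides contain the element-specific procedure ("the how").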

In addition, we organized the tasks within each duty according to the level of skill and experience required to perform them. We defined three operator levels, from the novice Network Operation Center operator at Level 1 to the advanced Network Operation Center operator at Level 3. In total, 137 tasks are defined for the job of advanced Network Operation Center operator.

Once the tasks, procedures, and best practices were identified, it was relatively easy to create a set of checklists for mentors to use when training learners to perform a task. The Structured On-the-Job Training Guides with checklists were grouped in individual binders by network element. These binders provided a place for the mentor and learner to record the learner's progress. We then piloted the structured on-the-job training for each element, using one of the subject matter experts as a mentor and a relatively experienced operator as a learner. At the conclusion of the pilots, we revised the Structured On-the-Job Training Guides wherever necessary to ensure clarity and consistency.

The performance standard for all tasks is "Learner can perform the task with 100% accuracy." For simple tasks, the learner may be able to meet the standard immediately after the initial instruction. For more complex tasks, learners might need (a) time to practice the task in a lab or other offline environment, (b) time to study the resource material describing the task, or (c) additional time with the mentor in coaching sessions, observing a third person doing the task, or in some other learning situation the mentor devises. Most tasks are checked off in a single follow-up session.

The newly certified advanced Network Operation Center operators will serve as mentors for the novice and Level 2 operators in the Network Operation Center. To develop this initial set of certified people, however, we had to recruit mentors from the pool of subject matter experts on the network elements. We chose individuals who had not only the required expertise on each of the tasks, but also a demonstrated ability to teach others effectively.

One mentor per network element per Network Operation Center, plus one mentor per network element from the Network Assistance Center, participated in a one-day training session on how to use the Structured On-the-Job Training Guide and checklists, and on the communication and other skills required to be an effective mentor. We also gave the mentors a process for providing task and procedure feedback to the Technical Education and Technical Information groups so that we can keep the Structured On-the-Job Training Guide and procedural documentation up to date.

The mentors spent varying amounts of face-to-face time in structured on-the-job training with the learners, depending on the number and complexity of the tasks for that network element. Training time varied from 8 to 16 hours. In between face-to-face sessions, learners had telephone and e-mail access not only to their regional mentor, but also to the mentors assigned to the other Network Operation Centers for that network element.

Once a mentor was satisfied that a learner could perform a task with 100% accuracy, mentor and learner initialed and dated the checklist, and the mentor notified the learner's manager and Technical Education that the structured on-the-job training was completed.

As soon as the structured on-the-job training was launched, we began work on the certification process. As described above, to be fully certified, an operator must have completed certain classroom courses, served a specified amount of time on the job (at least six months), finished the structured on-the-job training, and demonstrated proficiency on the defined tasks. This evaluation of task performance was developed directly from the structured on-the-job checklists.

First, managers and subject matter experts from the pool determined which tasks would be certified. Although we defined, and trained operators to perform, all tasks, only the ones most critical to network operation were selected for certification.

The managers and experts also provided the necessary advice about which of the following three methods (or combination of methods) should be used for effective evaluation of a task:

The certifiable tasks for each network element were reformatted into certification tables listing the task, the evaluation method, and three columns marked "1st try," "2nd try," and "Not fully certified." If the technician being certified cannot perform a task with 100% accuracy after two tries, the technician is marked "Not fully certified" for that task, and the certifier completes a competency development and follow-up plan for additional coaching by the mentor on that task. Certification for such tasks was usually achieved in a brief follow-up session.
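The two-try rule of the certification table can be expressed as a small decision function. The sketch below is hypothetical: the class `CertificationRow`, the helper `tasks_needing_plan`, the placeholder method labels ("Method 1", "Method 2"), and the sample tasks are illustrative only, since the three evaluation methods themselves are not named here.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class CertificationRow:
    """One row of a certification table: task, evaluation method, and try results."""
    task: str
    method: str                       # placeholder label; actual method names not given here
    first_try: Optional[bool] = None  # True if performed with 100% accuracy
    second_try: Optional[bool] = None

    def result(self) -> str:
        """Apply the two-try rule: pass on the 1st or 2nd try, else not fully certified."""
        if self.first_try:
            return "1st try"
        if self.first_try is False and self.second_try:
            return "2nd try"
        if self.first_try is False and self.second_try is False:
            return "Not fully certified"
        return "Pending"  # not yet evaluated


def tasks_needing_plan(rows: list[CertificationRow]) -> list[str]:
    """Tasks that need a competency development and follow-up plan."""
    return [row.task for row in rows if row.result() == "Not fully certified"]


# Hypothetical table for one operator and one network element.
rows = [
    CertificationRow("Perform triage", "Method 1", first_try=True),
    CertificationRow("Restore a failed link", "Method 2", first_try=False, second_try=True),
    CertificationRow("Back up switch data", "Method 1", first_try=False, second_try=False),
]

for row in rows:
    print(f"{row.task:<24} {row.method:<9} {row.result()}")

print("Plans needed for:", tasks_needing_plan(rows))
```

A "Pending" state is included so that a partially completed table can be recorded without being mistaken for a failure.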

At the start of any certification program, an important question to answer is, "Who certifies the certifiers?" We intend, of course, to use our newly certified operators to certify Level 1, Level 2, and new Level 3 (advanced) operators. To create this initial set of certified advanced Network Operation Center operators, however, we decided to make the best use of our people resources by drawing certifiers from the mentor pool. These individuals were already familiar with the Structured On-the-Job Training Guides and the best practices, having used them when training.

It was clear, however, that a mentor should not certify anyone whom he or she had trained. We therefore arranged for mentors from one Network Operation Center to certify operators in another.
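The certifier-assignment rule (no mentor certifies his or her own learner, and certifiers come from a different Network Operation Center) can be sketched as a simple filter. This is an illustrative sketch only: the `Mentor` record, the function `pick_certifier`, the region names, and the added requirement that the certifier cover the same network element are assumptions, the last one inferred from the certification teams being organized by network element.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Mentor:
    name: str
    noc: str      # home Network Operation Center (hypothetical region names below)
    element: str  # network element the mentor trains and certifies on


def pick_certifier(
    learner_noc: str, element: str, trainer: Mentor, mentors: list[Mentor]
) -> Optional[Mentor]:
    """Return the first mentor for this element who is not the trainer and not in the learner's NOC."""
    for mentor in mentors:
        if mentor.element == element and mentor != trainer and mentor.noc != learner_noc:
            return mentor
    return None  # no eligible certifier; the assignment must be handled manually


mentors = [
    Mentor("Mentor A", "West", "Switch"),
    Mentor("Mentor B", "East", "Switch"),
    Mentor("Mentor C", "East", "STP"),
]

certifier = pick_certifier("West", "Switch", trainer=mentors[0], mentors=mentors)
print(certifier)
```

Returning `None` rather than falling back to the trainer keeps the independence rule strict.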

The certifiers participated in a one-day training session on how to complete the certification tables and competency development plans, and on appropriate behaviors to use during the certification process.

For this first wave of certification we obtained the commitment of 48 certifier days. Using two certification teams of six certifiers each (one for each network element), we were able to certify the 24 advanced Network Operation Center operators within a single month. Certification sessions usually covered all of the duties and tasks for a given network element. The total number of tasks for a network element varied from 5 to 30 and sessions lasted between 2 and 8 hours. Given our average turnover rate in the Network Operation Centers, we expect that the newly certified operators will be able to keep up easily with new operator certifications.
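As a rough check on these staffing figures, and assuming the 48 certifier days were divided evenly among the certifiers (how they were actually allocated is not stated), the commitment works out to about four days per certifier:

$$
2 \times 6 = 12 \ \text{certifiers}, \qquad \frac{48 \ \text{certifier-days}}{12 \ \text{certifiers}} = 4 \ \text{days per certifier}
$$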

The tracking system is a relatively simple, although large, database that includes the certification results for each individual and each task. It also links to information about job experience and completion of technical and nontechnical classroom training. Managers provide the required input about job experience and classroom training completion, and certifiers provide the results of the certification process, including missed tasks.

The missed task information is an important part of the Technical Education and Technical Information groups' continuous improvement processes. If many individuals cannot perform a task accurately in two tries, there may be a problem with the task analysis, the procedures, the documentation, or the structured on-the-job training. Even tasks that frequently require a second try should be scrutinized for ambiguity.
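The review described above can be sketched as a pass over the recorded certification outcomes that flags tasks with many misses, or with frequent second tries, for closer scrutiny. The data layout, the function `review_candidates`, and the thresholds (`miss_share=0.3`, `retry_share=0.5`) are hypothetical choices for illustration; the text does not specify any numeric cutoffs.

```python
from __future__ import annotations

from collections import Counter

# Hypothetical results: (operator, task, outcome) as recorded by certifiers.
results = [
    ("Op1", "Perform triage", "1st try"),
    ("Op2", "Perform triage", "2nd try"),
    ("Op3", "Perform triage", "2nd try"),
    ("Op1", "Restore a failed link", "Not fully certified"),
    ("Op2", "Restore a failed link", "Not fully certified"),
    ("Op3", "Restore a failed link", "1st try"),
    ("Op1", "Verify cross-connects", "1st try"),
    ("Op2", "Verify cross-connects", "1st try"),
]


def review_candidates(rows, miss_share=0.3, retry_share=0.5):
    """Flag tasks whose miss or second-try rates reach illustrative thresholds."""
    attempts = Counter(task for _, task, _ in rows)
    misses = Counter(task for _, task, out in rows if out == "Not fully certified")
    retries = Counter(task for _, task, out in rows if out == "2nd try")
    flagged = {}
    for task, total in attempts.items():
        if misses[task] / total >= miss_share:
            flagged[task] = "many misses: review analysis, procedure, docs, and OJT"
        elif retries[task] / total >= retry_share:
            flagged[task] = "frequent second tries: check for ambiguity"
    return flagged


for task, reason in review_candidates(results).items():
    print(f"{task}: {reason}")
```

Checking misses before retries means a task is reported under its more serious problem first.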

The tracking system also produces simple competency profiles that give Network Operation Center managers regular reports of the progress the operators are making toward full certification at the different levels.

We decided that the Technical Education group will be responsible for maintaining the Structured On-the-Job Training Guide and certification documents (tables, competency development plans, etc.), and that the Technical Information group will be responsible for maintaining the best practice documentation. This requires close communication between the two groups as input comes in from several sources, such as engineering, information systems, vendors, and security. We have designated one person per region to be the single point of contact for best practice changes coming from the field.

One of the greatest training challenges is to change on-the-job behavior. Most classroom training programs measure only Level 1 results (Kirkpatrick 1976), that is, how much the participants valued the training and what they thought they got out of it. Some programs use pre- and post-testing to determine how much of the training material was retained at the end of the training session (Level 2 learning). This certification program results directly in Level 3 outcomes (changed on-the-job behavior) and in documented performance to a specified standard.

Although the program achieved its original goal of building quality into the on-the-job performance of the advanced network operators, we also discovered an unexpected benefit of the Network Operation Center operator task matrix produced by the DACUM process. When the Engineering group plans new equipment or services, implementation teams can review the matrix and identify which Network Operation Center operator tasks will be affected by the proposed changes and what new tasks must be added. As a result, the Technical Education group receives notice of training requirements, and the Technical Information group receives notice of procedural documentation needs, well before the changes take effect in the field.

The demand for this kind of job and task analysis process is increasing within the company. Work groups that aren't considering certification are finding that the role clarity and procedural standardization that the process produces are effective tools in the work of achieving organizational excellence.
